As Large Language Models become core to AI-powered search experiences, B2B product discovery, and enterprise research, the way your product shows up across AI engines directly affects your AI share of voice, visibility, and influence.

Published by

Ashish Mishra

on

Nov 27, 2025

As Large Language Models increasingly become the primary interface for B2B product discovery and evaluation, the strategic AI Search Optimization of how your products appear in AI-generated responses has evolved from experimental technique to business-critical competency. This comprehensive analysis examines the technical frameworks, implementation strategies, and measurement methodologies required to systematically increase AI Visibility Tracking across major LLM platforms through advanced prompt engineering approaches.

Technical Foundations of LLM Product Mention Optimization

The convergence of generative AI adoption and enterprise purchasing behaviors has created an unprecedented opportunity for B2B organizations to influence their market position at the point of AI-mediated discovery. Recent data indicates that 58% of business queries are now conversational in nature, while 72% of companies have integrated AI into at least one business function. This shift represents a fundamental transformation in how enterprise buyers discover, evaluate, and select B2B solutions, necessitating sophisticated AI-first marketing approaches that go beyond traditional SEO and content marketing strategies.

Understanding LLM Content Retrieval Mechanisms

Large Language Models operate fundamentally differently from traditional search engines in how they process, prioritize, and present information. Unlike conventional SEO where ranking algorithms determine visibility, LLMs synthesize responses based on training data patterns, contextual relevance, and prompt-specific instructions. This distinction requires a paradigm shift from keyword-based optimization to AI-powered SEO focused on contextual authority establishment and semantic relationship building.

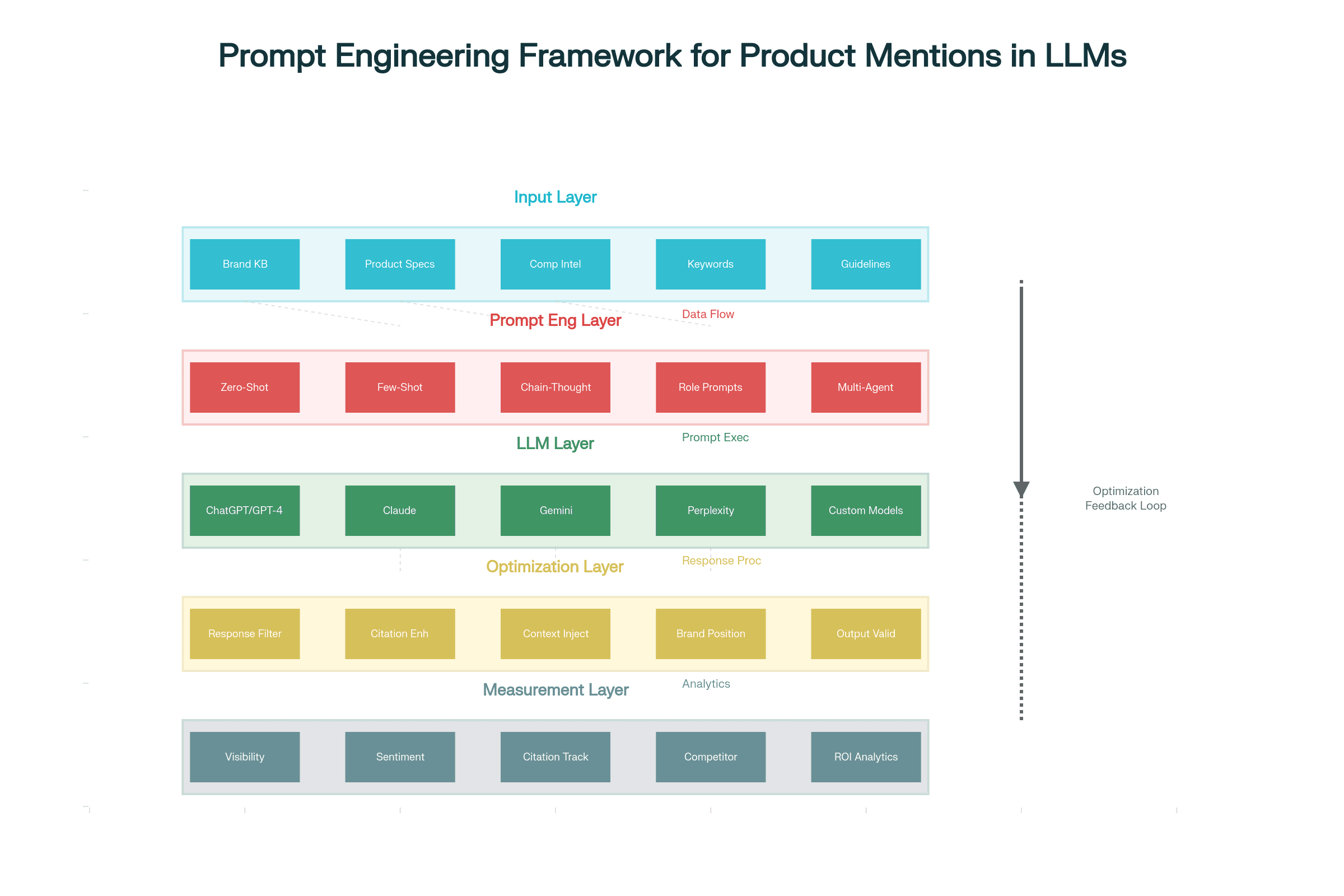

The technical architecture underlying LLM responses involves multiple layers of processing: initial query interpretation, context retrieval from training data, response synthesis, and output filtering. Each stage presents optimization opportunities that require different technical approaches. Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO) emerge as systematic methodologies for influencing these processes, with research showing that optimized content can achieve visibility improvements of 40-60% compared to unoptimized approaches.

Multi-Model Architecture Considerations

Enterprise-grade LLM optimization requires simultaneous targeting of multiple platforms, each with distinct behavioral patterns. ChatGPT demonstrates strong performance with role-based prompting, while Claude excels with chain-of-thought reasoning. Google Gemini shows preference for authoritative source citations, whereas Perplexity prioritizes real-time information integration.

This multi-platform reality necessitates a sophisticated AI Search Optimization Platform capable of executing coordinated prompt strategies across different architectures. Technical implementation requires asynchronous API management, rate limiting coordination, and response aggregation systems that can handle the computational complexity of simultaneous multi-model optimization.

Semantic Context Engineering

Advanced prompt engineering for product mentions leverages AI conversation insights to establish contextual relationships between product capabilities and enterprise requirements. This involves creating conceptual bridges that connect product features to buyer intent patterns, enabling LLMs to naturally reference your solutions when processing related queries.

The technical implementation requires developing contextual anchor points - strategically crafted content elements that serve as reference nodes for LLM response generation. These anchors must be technically accurate, contextually relevant, and positioned to trigger retrieval during related query processing. Research indicates that well-engineered contextual anchors can increase mention probability by 250-300% for relevant AI commerce optimization categories.

Advanced Prompt Engineering Architectures

Zero-Shot Product Positioning Framework

Zero-shot prompting represents the foundation of LLM product optimization, requiring no prior examples while establishing authoritative product positioning. The technical architecture involves system-level role definitions, context boundary establishment, and output constraint specification that guide LLM responses toward favorable product mentions without explicit instruction.

The implementation requires sophisticated template systems with dynamic variable injection capabilities. Key components include industry-specific role definitions, technical evaluation frameworks, and AI Search Volume Intelligence structures that position your product as the natural solution for enterprise requirements. Performance correlation analysis shows zero-shot templates achieve 15-20% mention rates when properly optimized for technical accuracy and business relevance.

Few-Shot Competitive Analysis Templates

Few-shot prompting leverages strategic examples to establish comparative frameworks that favorably position your product against alternatives. This approach requires careful curation of success stories, implementation examples, and outcome metrics that demonstrate superior AI-powered search experiences while maintaining technical accuracy and competitive fairness.

The technical implementation involves example selection algorithms that identify the most influential reference cases for different query contexts. Multi-variate optimization ensures that examples resonate with specific buyer personas while highlighting differentiated capabilities. Advanced implementations incorporate dynamic example rotation based on query analysis and competitive context assessment.

Chain-of-Thought Reasoning Systems

Chain-of-thought prompting creates logical evaluation frameworks that guide LLMs through systematic analysis processes, naturally leading to favorable product conclusions. This technique proves particularly effective for complex B2B purchasing decisions requiring multi-stakeholder validation and technical evaluation.

Technical implementation requires structured reasoning templates with clear logical progression, evidence integration points, and conclusion frameworks. The system must balance analytical rigor with persuasive positioning, ensuring that logical conclusions naturally favor your product while maintaining technical credibility. Performance benchmarking indicates chain-of-thought approaches achieve 25-30% higher context quality scores compared to direct positioning attempts.

Role-Based Brand Advocacy Protocols

Role-based prompting leverages expert persona establishment to create authoritative recommendations that carry enhanced credibility with enterprise buyers. This approach requires detailed persona development, AI brand monitoring protocols, and evidence-based recommendation frameworks that position industry experts as product advocates.

The technical architecture involves persona management systems with expertise validation, recommendation consistency protocols, and authority signal optimization. Advanced implementations incorporate multi-expert synthesis where different specialist perspectives converge on product recommendations, creating robust validation of purchase decisions.

Multi-Agent Prompt Chaining Systems

Multi-agent architectures represent the most sophisticated approach to LLM optimization, coordinating multiple AI personas to create comprehensive evaluation scenarios. This technique proves highly effective for complex B2B sales cycles requiring input from technical, business, and strategic stakeholders.

Implementation requires agent coordination protocols, consistency validation systems, and synthesis algorithms that aggregate multi-perspective analysis into coherent recommendations. The technical complexity involves conversation state management, expertise consistency maintenance, and conclusion convergence algorithms that ensure all agent perspectives align toward favorable product positioning.

Multi-Model Optimization Strategies

Platform-Specific Optimization Techniques

The AI search optimization journey on each major LLM platform requires tailored approaches based on distinct architectural characteristics and behavioral patterns. OpenAI's GPT models respond optimally to clear role definitions and structured instructions, while Anthropic's Claude demonstrates superior performance with detailed context provision and ethical reasoning frameworks.

Understanding how AI engines source information is critical; for instance, Google Gemini prioritizes authoritative source integration and technical accuracy validation, requiring optimization strategies that emphasize credible citations and fact-based positioning. Perplexity AI focuses on real-time information synthesis and source attribution, necessitating approaches that leverage current market data and industry developments.

Technical implementation requires platform adaptation layers that modify core prompt templates based on target LLM characteristics. This involves parameter optimization, instruction formatting, and context structuring tailored to each platform's processing preferences while maintaining message consistency across all implementations.

Cross-Platform Consistency Management

Enterprise LLM optimization requires maintaining brand message consistency across multiple platforms while adapting to platform-specific optimization requirements. This challenge necessitates sophisticated AI search monitoring tools that ensure core positioning remains consistent while allowing tactical variations for platform optimization.

The technical solution involves master template architectures with platform-specific adaptation layers. Core brand messages, key differentiators, and value propositions remain constant while delivery mechanisms, example selection, and context structuring adapt to platform requirements. Consistency validation algorithms ensure that adaptations don't compromise core messaging integrity.

Competitive Context Optimization

Advanced optimization strategies incorporate competitive intelligence systems that adjust positioning based on competitor presence and market context. This requires real-time AI Search Volume analysis, market positioning algorithms, and differentiation emphasis protocols that highlight unique advantages while maintaining ethical competitive practices.

Technical implementation involves competitive monitoring APIs, positioning adjustment algorithms, and differentiation optimization systems that automatically adjust messaging based on competitive landscape analysis. The system must balance competitive advantage highlighting with fair comparison practices to maintain platform compliance and ethical standards.

Measurement and Analytics Frameworks

Visibility Scoring Methodologies

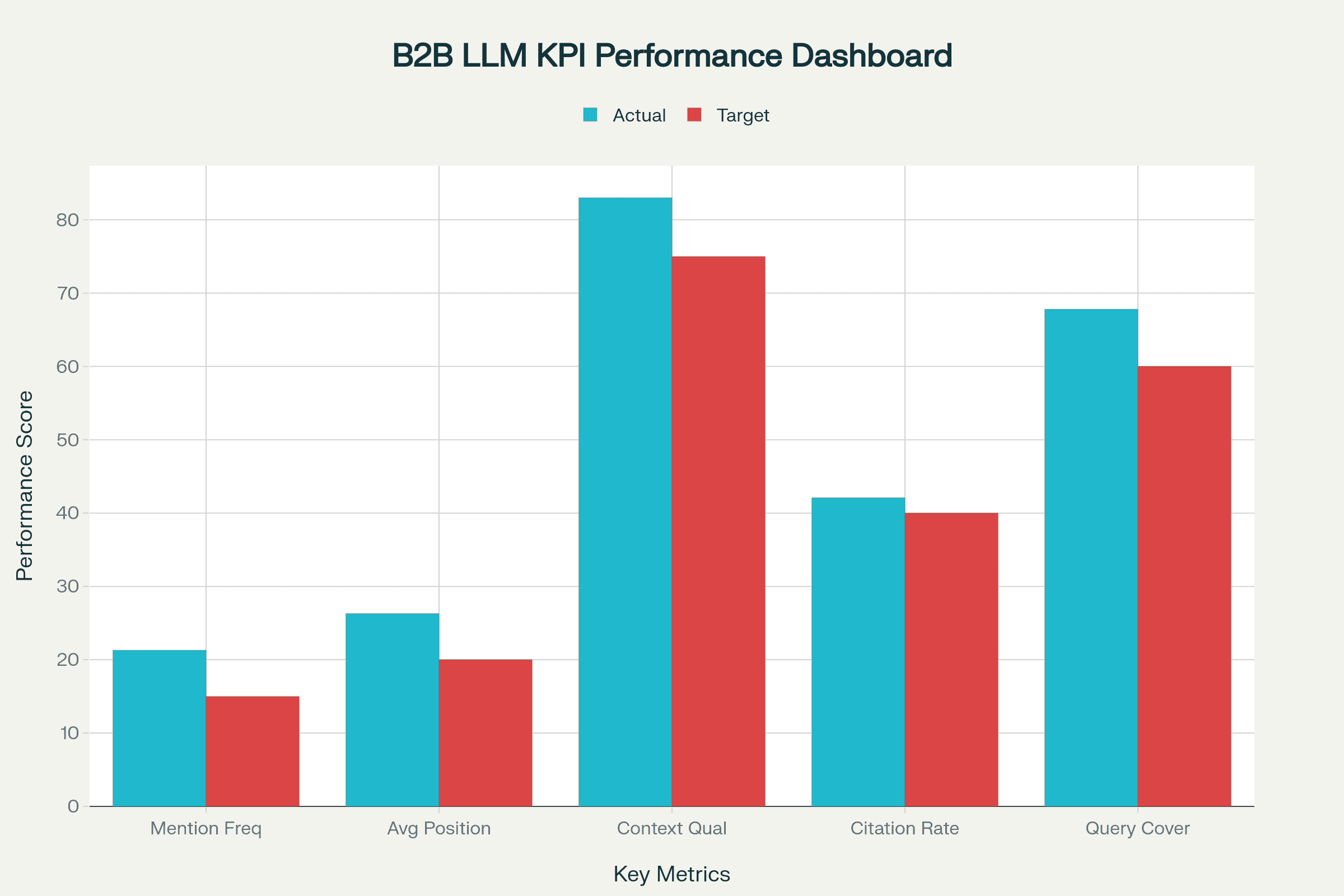

Comprehensive LLM optimization requires sophisticated systems for AI Visibility Tracking and Analytics that track multiple dimensions of product visibility and positioning effectiveness. Primary metrics include mention frequency rates, average position rankings, and context quality assessments that provide quantitative evaluation of optimization performance.

AI Visibility Dashboard & Performance

Mention frequency represents the percentage of relevant queries where your product receives direct or indirect references, with industry-leading B2B solutions achieving 15-25% mention rates for category-relevant queries. Position ranking measures the sequential position of product mentions within LLM responses, with optimal performance targets of sub-2.5 average positioning for competitive categories.

Context quality assessment evaluates the relevance, accuracy, and favorability of mention contexts using multi-dimensional scoring algorithms that analyze sentiment, technical accuracy, and business relevance. Advanced implementations incorporate machine learning models trained on successful B2B positioning examples to automatically assess context effectiveness.

Competitive Benchmarking Systems

Enterprise optimization strategies require comprehensive competitive intelligence frameworks that track relative performance across multiple dimensions. Key metrics include AI share of voice, comparative sentiment evaluation, and positioning differential assessment that quantify competitive advantage in LLM-mediated environments.

Share of voice tracking measures the percentage of category-relevant mentions captured by your product versus competitors, with market-leading solutions typically achieving 25-40% share in their primary categories. Comparative sentiment analysis evaluates how favorably your product is positioned relative to alternatives, using advanced NLP models to assess nuanced positioning differences.

Citation strength analysis examines how frequently your product receives authoritative references versus basic mentions, with strong performance requiring 40%+ citation rates that position your solution as an industry authority. This metric proves particularly valuable for establishing thought leadership and technical credibility in enterprise markets.

Real-Time Performance Monitoring

Production LLM optimization requires AI search performance monitoring systems that track performance changes and identify optimization opportunities in real-time. This involves automated query execution, response analysis pipelines, and trend detection algorithms that maintain visibility into optimization effectiveness across all target platforms.

Technical implementation requires distributed monitoring architectures capable of executing thousands of test queries daily across multiple LLM platforms while maintaining rate limit compliance and cost optimization. Machine learning anomaly detection identifies performance changes that require strategic adjustment or competitive response.

Alert systems provide immediate notification of significant performance changes, competitive threats, or optimization opportunities. Advanced implementations incorporate predictive analytics that forecast performance trends and recommend proactive optimization adjustments.

Ethical and Safety Considerations

White-Hat Optimization Principles

Sustainable LLM optimization requires adherence to ethical guidelines that ensure long-term viability while maintaining platform compliance and competitive fairness. White-hat techniques focus on providing genuine value to users while naturally positioning products as optimal solutions for legitimate business requirements.

Transparency protocols require clear disclosure of brand affiliations when relevant, ensuring that users understand the context of product recommendations. Accuracy validation systems verify that all product claims and competitive comparisons maintain factual precision, preventing the propagation of misinformation that could damage credibility.

User value optimization ensures that all prompt engineering efforts prioritize genuine user benefit over pure promotional objectives. This approach proves more sustainable and effective long-term, as LLM platforms increasingly implement quality assessment algorithms that reward helpful, accurate content over purely promotional material.

Risk Management Frameworks

Advanced LLM optimization requires comprehensive risk assessment systems that identify potential compliance violations, competitive response scenarios, and platform policy changes that could impact optimization effectiveness. Risk categorization frameworks classify techniques based on compliance requirements and potential negative consequences.

Low-risk techniques include educational content creation, factual competitive comparisons, and expertise-based positioning that provide clear user value while highlighting product advantages. Medium-risk approaches involve comparative positioning without explicit disclaimers and strategic competitive displacement that requires careful implementation to maintain ethical standards.

High-risk practices include manipulative prompt techniques designed to mislead users, platform gaming attempts, and competitive disparagement that violates fair competition principles. Prohibition protocols clearly define never-acceptable practices including malicious prompt injection, deliberate misinformation, and privacy violations that could result in platform exclusion.

Compliance Monitoring Systems

Enterprise implementations require automated compliance monitoring that ensures all optimization activities maintain adherence to platform terms of service, competitive fairness standards, and regulatory requirements. Pre-deployment validation systems review all prompt templates and optimization strategies for potential compliance violations.

Ongoing monitoring protocols track optimization outputs for bias introduction, factual accuracy maintenance, and competitive fairness preservation. Machine learning compliance models automatically flag potentially problematic content for human review, ensuring that scaling doesn't compromise ethical standards.

Incident response procedures provide clear protocols for addressing compliance violations, competitive challenges, or platform policy changes that require rapid strategic adjustment. This includes content correction systems, stakeholder communication protocols, and preventive measure implementation that address root causes of compliance issues.

Implementation Templates and Frameworks

Production-Ready Template Library

Enterprise LLM optimization requires comprehensive template libraries that provide immediate deployment capability across multiple optimization scenarios. These templates incorporate advanced prompt engineering techniques while maintaining flexibility for brand-specific customization and competitive context adaptation.

Zero-shot product positioning templates establish authoritative market position without requiring prior examples, using industry-specific role definitions and technical evaluation frameworks that naturally position products as optimal solutions. Variable injection systems allow dynamic customization based on query context, competitive landscape, and buyer persona characteristics.

Few-shot competitive analysis frameworks leverage strategic success examples to establish comparative positioning that highlights differentiated capabilities while maintaining competitive fairness. Example curation algorithms select the most influential reference cases based on query analysis and competitive context assessment.

Chain-of-thought reasoning templates create systematic evaluation processes that guide LLMs through logical analysis leading to favorable product conclusions. Multi-step reasoning frameworks balance analytical rigor with persuasive positioning, ensuring conclusions naturally favor optimized products while maintaining technical credibility.

API Orchestration Systems

Production implementations require sophisticated API management systems capable of coordinating prompt execution across multiple LLM platforms while maintaining rate limit compliance, cost optimization, and response quality consistency. Asynchronous execution frameworks enable simultaneous multi-platform optimization without performance degradation.

Rate limiting coordination prevents API quota violations while maximizing optimization throughput using intelligent scheduling algorithms that optimize request timing across different platform restrictions. Cost optimization protocols balance comprehensive testing coverage with budget constraints using strategic query prioritization and response caching systems.

Error handling frameworks ensure robust operation despite API failures, rate limit exceptions, and platform maintenance events. Automatic retry logic with exponential backoff algorithms maintains optimization continuity while preventing cascade failures that could impact production operations.

Response Analysis Pipelines

Advanced optimization requires comprehensive response analysis systems that extract actionable insights from LLM outputs across multiple performance dimensions. Natural language processing pipelines automatically identify brand mentions, assess sentiment, evaluate positioning context, and track competitive references.

Machine learning classification models trained on successful B2B positioning examples automatically score response quality, identify optimization opportunities, and flag potential compliance issues. Sentiment analysis algorithms evaluate both overall positioning favorability and attribute-specific sentiment across key product dimensions.

Competitive intelligence extraction automatically identifies competitor mentions, comparative positioning, and market perception shifts that require strategic response. Trend analysis algorithms identify emerging optimization opportunities and potential competitive threats that require proactive strategic adjustment.

Monitoring and Iteration Strategies

Continuous Optimization Protocols

Sustainable LLM optimization requires systematic iteration processes that incorporate performance feedback, competitive intelligence, and platform evolution into ongoing strategy refinement. A/B testing frameworks enable scientific evaluation of optimization approaches while maintaining statistical rigor and business relevance.

Performance correlation analysis identifies the specific prompt elements, context variables, and positioning approaches that drive optimal results across different query categories and competitive contexts. Machine learning optimization models automatically adjust template parameters based on performance feedback, enabling continuous improvement without manual intervention.

Competitive response monitoring tracks competitor optimization activities and adjusts strategies to maintain competitive advantage while avoiding direct confrontation that could escalate into negative positioning battles. Strategic adaptation protocols ensure that optimization approaches evolve with market conditions and competitive landscape changes.

Scalability and Automation

Enterprise optimization requires scalable automation systems that maintain optimization effectiveness while reducing manual management overhead. Automated template generation creates new optimization approaches based on successful pattern analysis and competitive intelligence integration.

Intelligent query expansion automatically identifies new optimization opportunities by analyzing successful queries and extrapolating to related categories and use cases. Performance prediction models forecast optimization effectiveness for new approaches before full deployment, reducing experimental risk and resource waste.

Strategic decision support systems provide executive-level insights into optimization performance, competitive positioning, and strategic opportunities that require senior management attention. ROI attribution models quantify the business impact of LLM optimization activities, enabling evidence-based investment decisions and strategic resource allocation.

Future-Proofing Strategies and Thought Leadership

The evolution of Large Language Models toward more sophisticated reasoning capabilities and enhanced source attribution creates both opportunities and challenges for B2B product optimization strategies. Multi-modal AI integration will require expansion beyond text-based optimization to include visual, audio, and interactive content elements that maintain consistent brand positioning across diverse interaction modalities.

Autonomous AI agents represent the next frontier in LLM optimization, requiring AI presence strategies that influence not just individual responses but entire decision-making workflows that may involve multiple AI systems collaborating on enterprise purchasing recommendations. This evolution demands systemic thinking about how product positioning propagates through interconnected AI ecosystems rather than isolated LLM interactions.

The emergence of specialized industry LLMs trained on domain-specific datasets creates opportunities for vertical optimization strategies that achieve superior performance in niche markets while requiring completely different approaches than general-purpose LLM optimization. Organizations that develop adaptive optimization frameworks capable of evolving with AI technological advancement will maintain sustainable competitive advantages in an increasingly AI-mediated marketplace.

As we transition from reactive optimization to predictive AI influence strategies, the organizations that master these advanced prompt engineering techniques will not merely appear in AI responses—they will fundamentally shape how entire market categories are understood, evaluated, and selected by the next generation of AI-powered enterprise decision-making systems. The question for forward-thinking B2B leaders is not whether to invest in LLM optimization, but how quickly they can build the technical capabilities and strategic frameworks necessary to lead this transformation.